EU Parliament Debates Digital Fairness Act Consultation

MEPs press the Commission on timelines, child protection, and closing loopholes on dark patterns and design.

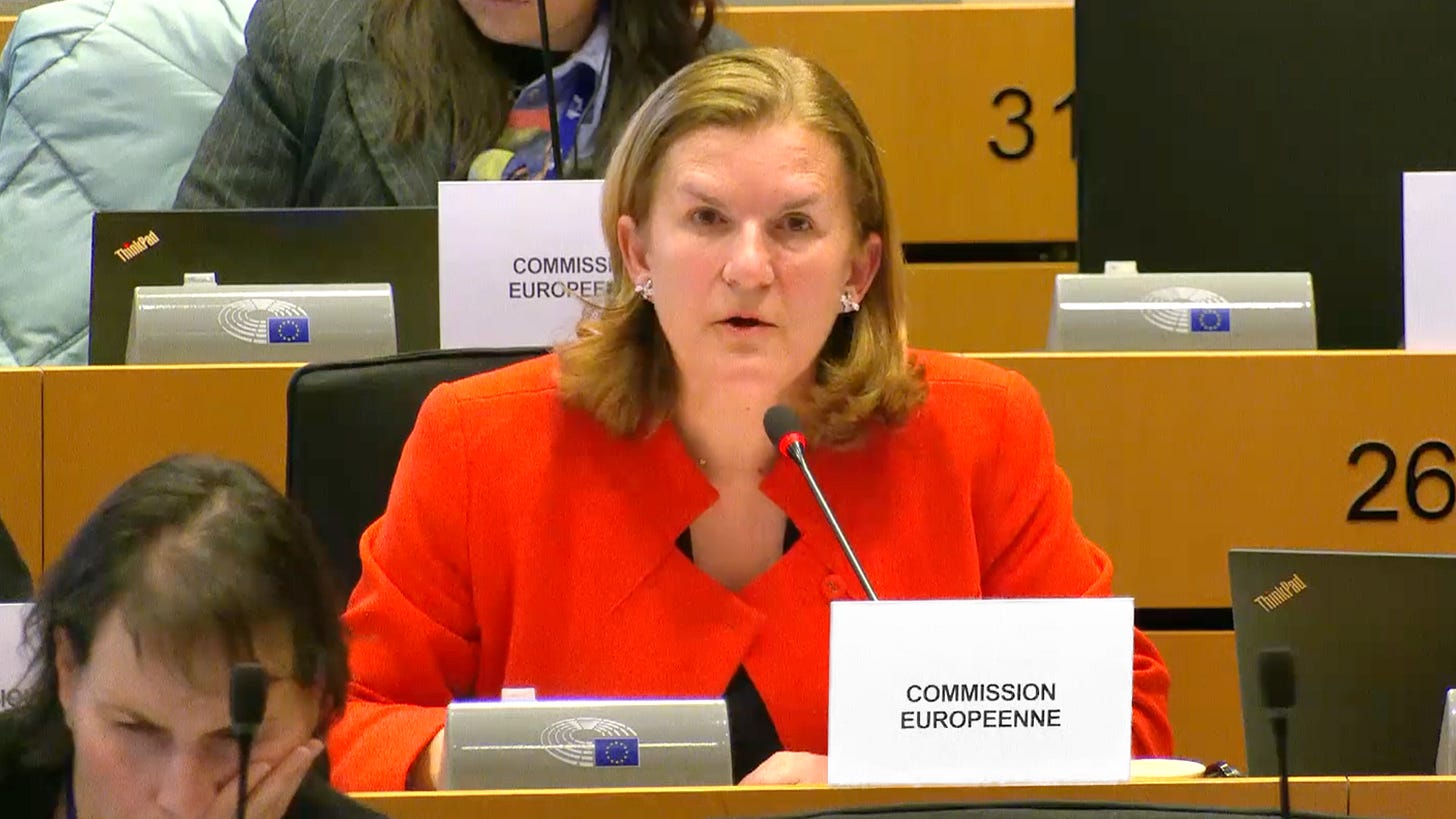

On 26 January 2026, the European Parliament’s IMCO committee held an exchange of views with the European Commission on the Digital Fairness Act (DFA), focused on the outcomes of the public consultation.

The Commission first presented the results, highlighting that 70% of consultation respondents supported binding EU intervention in areas such as dark patterns, addictive design features, unfair personalisation/influencer marketing, and other problematic digital product tactics. There was similarly “high support” for measures better protecting minors online, echoing the European Parliament’s own calls to fill gaps in these areas.

The Commission is considering measures on drip pricing, misleading dynamic pricing, and opaque price comparisons.

Protecting minors was stressed as “a priority for the DFA,” with ideas like switching off addictive design features by default for underage users and extending the DSA’s ban on targeted ads to minors across all online traders.

IMCO MEPs and Commission Q&A

Christel Schaldemose (S&D) pressed the Commission on the timeline, noting that a proposal only by Q4 2026 means new protections might not take effect for years. She urged faster action to “protect kids better,” given broad agreement on many measures. Schaldemose also asked if the DFA will incorporate age verification/age limits, a key recommendation of Parliament’s own report, and whether the Commission is considering stronger liability for platforms or their managers, since current fines can be shrugged off by big online players.

The Commission sympathised but explained that Q4 2026 is already a “super tight” schedule. It confirmed that age verification measures are on the table: the team is assessing the need for legal age limits (potentially using the European digital identity framework), as indicated by the public consultation. The Commission is also examining the idea of new liability provisions to ensure platforms truly comply with child-protection rules, although these complex options must be carefully analysed before inclusion in the DFA.

Kim van Sparrentak (Greens/EFA) argued that policymakers must choose whether to heed consumers and SMEs or cave to Big Tech interests, clearly hoping the DFA will prioritise the former. While supporting special protections for minors, she noted broad public support for bolstering general consumer protections (such as updating the EU’s unfair commercial practices list for the digital age). Van Sparrentak questioned whether, legally, an “unfair commercial practice” can be prohibited only for minors but allowed for adults, arguing that if something is harmful and unfair, it should be banned for everyone, regardless of age.

The Commission “heard [her] call” and affirmed it is determined to close gaps for all consumers, minors and adults alike, including vulnerable groups like the elderly or persons with disabilities. At the same time, it sees scope to go further in certain areas to better protect children online, and is looking at additional measures under the DFA for those crucial issues, while still maintaining baseline protections for all consumers.

Dóra Dávid (EPP) cautioned against over-regulation. She noted that many stakeholder views on the DFA diverge, and warned that piling on new rules where existing laws already suffice could hinder innovation and confuse consumers. Dávid asked what form the DFA will take: whether it will be an “omnibus” set of amendments to existing directives or a standalone act, and whether the Commission is leaning toward a directive or a regulation.

In response, the Commission said the format of the DFA is still under consideration pending impact assessment results. One option is amending existing directives, but if entirely new provisions are needed, the Commission prefers a regulation to ensure uniform rules across the EU.

Pablo Arias Echeverría (EPP) first stressed the need to better protect minors online in a way that avoids market fragmentation from divergent national laws. Second, he highlighted the flood of inexpensive imports from third-country e-commerce platforms (notably from China) that bring unsafe products into the EU, and asked whether the DFA could help curb these unfair practices.

The Commission acknowledged both issues, affirming that a harmonised EU approach is needed to “avoid having 27 different rules” for online consumer protection, and calling the rise of unsafe products via foreign platforms a “huge issue” that the DFA will tackle alongside measures like customs reforms and DSA enforcement.

Laura Ballarín Cereza (S&D) asked how the DFA will ensure a coherent European framework that truly hits the platforms’ problematic business models without overlap or delay. Ballarín also pressed for EU action on influencer marketing (transparency and fair practices across borders) and cautioned against stifling Europe’s booming video game sector while addressing issues like loot boxes.

The Commission agreed on the need to protect minors online and to avoid fragmented rules (for example, on influencer marketing across Member States). It indicated that the DFA will target specific gaps, such as ensuring loot boxes and in-game purchases are transparent and comply with consumer law, but without over-regulating the sector, focusing on closing loopholes rather than imposing overly strict new burdens.

Sandro Gozi (Renew) asked what strategy the Commission will use to align the DFA with existing frameworks so that transparency and fairness requirements are not siloed or contradictory. Gozi further inquired if the Commission plans to add any new transparency rules for online video games, an area of concern for consumer protection.

The Commission assured that it is reviewing “all existing legislation” to ensure the DFA complements the current acquis (including the DSA and upcoming AI rules). It emphasised that the DFA’s mission is to fill remaining gaps, using imaginative solutions to cover unfair practices that slip through today, and to dovetail with parallel efforts (like enforcement actions and a strengthened Consumer Protection Cooperation regulation) so that no loopholes persist, even in sectors like gaming.

Gheorghe Piperea (ECR) raised the issue of “irrational purchases”. Piperea noted that no current EU or national law addresses the fact that dark patterns and manipulative designs can push consumers into irrational, emotionally driven purchases. He urged the Commission to consider tackling these psychological manipulations in the DFA.

The Commission acknowledged the concern, stating that this phenomenon is exactly why it is conducting an evidence-based review of existing laws and evaluating what new rules might be needed in the DFA to curb such exploitative practices.

Leïla Chaibi (The Left) pressed the Commission on its stance amid opposing pressures. On one side, consumers (and Parliament) clearly want stronger binding rules; on the other, “Big Tech” prefers a laissez-faire approach. She warned that past consumer-law packages seemed to favour industry interests over the public. Chaibi asked pointedly, “Who is the Commission going to listen to?” in drafting the DFA. She also challenged the Commission with two specifics. First, will it support an outright ban on exploitative practices like loot boxes in online games, which many consider predatory, or yield to the gaming lobby’s preferences? Second, given France’s new law on influencer marketing, will the DFA introduce an EU-wide definition of “influencer” and require uniform transparency (so influencers must clearly declare paid promotions), potentially even banning certain promotions (e.g. for cosmetic surgery or risky financial products) across Europe?

The Commission responded that it “listens to everybody, both sides” and finds it crucial to hear consumers’ voices about the problems they face. As an example, the Commission noted concerns about minors in “free-to-play” games who end up spending money on in-game purchases with insufficient transparency. The Commission indicated it is aware of such issues and is looking at greater transparency requirements and protections in areas like gaming and influencer content, while balancing input from all stakeholders.

Dr. Mark Leiser on Systemic Deception

A critical theoretical contribution to the DFA debate has come from Dr. Mark Leiser, a legal scholar who argues that the Commission must look “beyond the screen”. In an extensive letter to Commissioner Michael McGrath, Leiser posits that the concept of “dark patterns” has reached the limits of its analytical usefulness.

Leiser argues that the dark-patterns concept implicitly locates unfairness at the level of the “interface”: the button, the disclosure, the flow. He suggests that the most consequential forms of manipulation today are instead “architectural” and “systemic”. This distinction is critical for the DFA’s long-term effectiveness. While interface-level fixes (like mandatory cancellation buttons) improve the consumer experience at the margins, they do not address the “optimisation logic” that produces manipulative interfaces in the first place.

Leiser warns that if the DFA only catalogues prohibited dark patterns, platforms will “comply at the surface and compete below it,” shifting manipulation more deeply into ranking algorithms and monetisation architectures. He urges the Commission to treat the DFA as an opportunity to regulate the “manufacture of choice” rather than just the “presentation of choice”.

The Live Events Crisis: A Call for Ticketing Reform

A coalition of over 120 artists, managers, and venues has written to Commissioner McGrath to request that the DFA specifically address “fraudulent practices” in ticket resale.

The coalition argues that e-commerce platforms continue to facilitate “industrial-scale illicit marketing,” where tickets are sold before they have even gone on official sale. A survey of FEAT (Face-value European Alliance for Ticketing) members found that efforts to report illegal listings frequently go ignored; in one instance, 296 listings covering nearly 1,000 tickets were reported, with only a single response from the platform.

Minor Protection and the Expert Panel on Age Controls

An expert panel on age verification for social media is taking shape to support the DFA’s development. According to a Commission invitation letter reported by MLex, the panel will consist of about 20 specialists (scientists, physicians, academics, and child-safety advocates) assembled to advise on social-media age restrictions. This group, expected to start work in early 2026, will explore how to implement age checks and parental control mechanisms in a privacy-preserving and effective way. Its recommendations could feed into the DFA’s provisions on protecting minors online.

The Commission invited the following experts: Leanda Barrington-Leach, Antonia Torrens, Sonia Livingstone, Servane Mouton, Jutta Croll, Lauren Mason, Leo Pekkala, Kimmo Alho, Christine Bauer, Matthias Brand, Jeff Hancock, Pavel Kordík, James O’Higgins Norman, Brian O’Neil, Maria Soledad Pera, Piotr Toczyski, Patty Valkenburg, Alexandra Weilenmann, Elena Bozzola, Hanne Mari Schiøtz Thorud.

Digital Platforms Summit 2026

At the end of February, a major industry-policy forum will look into upcoming DFA issues. The Digital Platforms Summit 2026 (25 Feb in Brussels, hosted by CERRE) is set to explore how the DMA is reshaping markets and anticipate the forthcoming Digital Fairness Act. The agenda includes high-level panels on consumer protection by design, dark patterns, addictive design & personalisation, and child protection, exactly the topics dominating the DFA debate. Notably, the Commission’s Isabelle Pérignon (DG JUST) will appear in a fireside chat, and former EVP Margrethe Vestager is giving a keynote. Stakeholders can thus get a preview of the DFA’s direction and discuss enforcement challenges ahead of the proposal.

Stay in Touch

As always, feel free to reach out on LinkedIn or at james@edpi.eu if you have any requests, questions, or just want to say hi!